
AI literacy, training, transparency and guidelines: Advice from the JournalismAI Festival

Since the release of ChatGPT in November 2022, artificial intelligence (AI) has become a hot topic in the media. Unsurprisingly, it’s been one of the best-performing keywords on the Journalist Enquiry Service, as journalists ask PRs for expert comment and information on the subject.

Journalists and newsrooms have been using AI for content generation and assistance for a while now, as shown by the fact that this year marked the fourth edition of the JournalismAI Festival. Here are some of the key talking points from the event, including common misconceptions about AI, its impact on election coverage and photojournalism, and what the future holds for the technology and the media industry.

Transparency and trust

Public trust in the media hasn’t been particularly high for a number of years now, and it hasn’t been helped by deepfakes in elections and altered images and video. Speaking at the festival, Nick Diakopoulos, professor of computational journalism at Northwestern University, said that ‘the public are now more critical and distrustful than ever before’.

At the moment, AI is not helping to improve trust. Ezra Eeman, strategy and innovation director at NPO, explained that ‘labeling [stating that an article was produced with AI] actually lowers trust’. However, Chris Moran, head of editorial innovation at The Guardian, believes this will change over time as more explanation and information become available on how AI is used.

Transparency will therefore be key to rebuilding that trust. Dr David Campbell, education director at the VII Foundation, spoke about Adobe adopting protocols for images so the public can see how they have been produced: ‘It’s a digital trail to give evidence’. On election coverage, Andrew Dudfield, head of AI at Full Fact, said ‘fact checkers need the access to the best technology to be able to do their job properly and provide clarity’. The more journalists can show the public how the process works, and that humans remain in the loop when creating content, the more likely audiences are to trust and believe in what they’re seeing, reading, or watching.

Training, AI literacy, and implementing guidelines

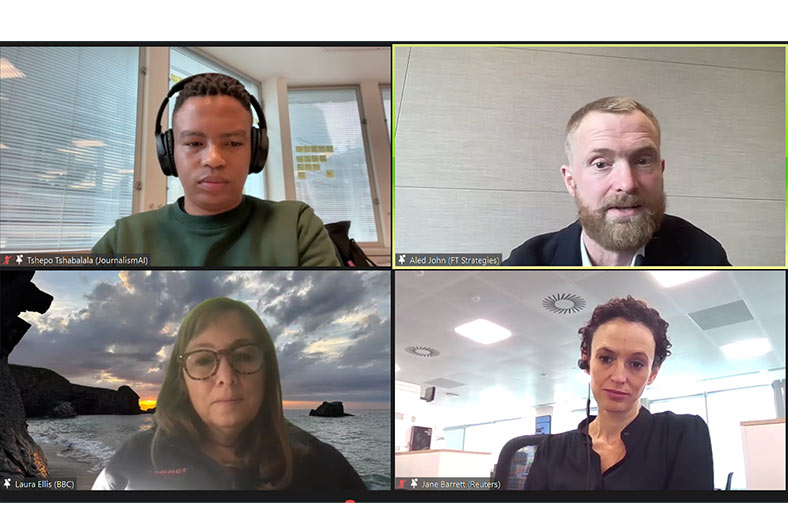

There have been many misconceptions about AI replacing journalists’ jobs, but Aled John, interim managing director at FT Strategies, stated that ‘technology is there to enable journalism rather than replace it’. However, as with any new technology, journalists will need to learn how to use it. Jane Barrett, global editor for media news strategy at Reuters, said ‘you have to train and understand how to use these different pieces of AI and large language models (LLMs)’.

Aled also pointed out that LLMs are not fact machines and rely on good data sets.

To work with those data sets safely, Laura Ellis, head of technology forecasting at the BBC, said it’s important to get ‘good enterprise deals to avoid data breaches or security issues. It needs a secure environment’. There is also a need for AI literacy, not just among journalists but across the whole newsroom. This is why setting guidelines calls for a cross-functional team, an approach already taken at Reuters, the BBC, and FT Strategies.

Hannes Cools, researcher at the AI, Media and Democracy Lab, has spent the last few months analysing 21 sets of AI guidelines from newsrooms around the world. The two main points that appeared in every organisation’s guidelines were a commitment to being transparent when AI has been used and an emphasis on human oversight in the process. He also concluded that, when drafting guidelines, media companies should establish a diverse group and adopt a risk assessment approach.

AI now vs AI in the future

While ChatGPT has only been around for a year, AI and LLMs have been helping newsrooms enhance the content creation process for much longer. Aled said AI is already helping with headline generation, automated tagging, large-scale fact-checking, recommendation engines, and improving ads. Jane has used an LLM as a space to brainstorm story ideas, including inspiration for COP28 articles, while Laura has used AI to help write stories for different audiences.

The technology will only become faster and more sophisticated. In photojournalism, David believes there is a ‘small space for visualising things we cannot photograph’. In election reporting and fact-checking, AI can help identify what is authentic and what is not. In Nick’s opinion, it can also be used for satire: ‘I think there’s so much potential for political satire with generative AI. Not to deceive viewers, but to convey political criticism, like any kind of political cartoon’.

Sannuta Raghu, AI working group lead at Scroll Media, works at a small news organisation that has built trust with its audience. AI gives organisations like this the potential to scale up, but, as Ezra advises, the models being built must be in line with the values of each news organisation. Ultimately, Jane believes that AI will save journalists time and make people’s lives better.